On June 26, 2025, Software Toolbox hosted a live panel discussion that brought together three industry subject matter experts. They explored how organizations can unify data across legacy and modern systems securely, efficiently, and without unnecessary disruption. They also discussed in depth what it really takes to create a common data framework that is not just effective but also scalable and future-ready.

From Silos to Insights: What You Need to Know

This blog post highlights the key takeaways from the panel, which unpacked seven critical areas to focus on when closing data gaps across operations. You’ll discover the most common challenges companies face, why aligning technology with business goals is essential, and how open, standards-based solutions can bridge the gap between old and new systems without the high costs and disruption of rip-and-replace approaches.

The insights shared in this conversation are rooted in real-world experience and filled with practical, actionable strategies you can start applying right away, no matter what your industry or location. If you're looking to unlock more value from your industrial data, you're in the right place. Keep reading, and you’ll gain a fresh perspective and a clearer path to smarter, more connected operations.

Curious how the experts approach data integration, scalability, and analytics readiness? Catch the full panel discussion on-demand and get practical strategies you can apply today.

Unlocking the True Value of Industrial Data: Why Silos Still Hold You Back

Massive volumes of data flow through oil and gas operations every day, from SCADA systems and PLCs to historians and cloud platforms. Yet too much of this data remains locked in silos, inaccessible to the people and systems that need it most. These silos slow decision-making, limit visibility, and prevent organizations from unlocking the full value of their information.

So, where do you begin? The discussion opened with an important question for the panelists: what do you see when companies try to unify data from disparate sources?

Solving Data Silos Isn’t Just a Tech Problem

Solving data silos isn’t just a technology problem; it’s a people and process challenge, too. Many companies believe they must rip and replace their existing systems to succeed, but that isn’t the case. With the right strategy and open, standards-based solutions, you can modernize gradually, bridge the gap between legacy and modern technologies, and generate value incrementally over time.

“What really needs to happen is to bridge the gaps between old and new technologies using open standards-based solutions that can evolve over time to enable you to modernize gradually, generating value each step of the way.” – John Weber

Beyond the Org Chart: Why OT/IT Alignment Takes More Than Structure

While the convergence of operational technology (OT) and information technology (IT) is a popular topic, many companies struggle to achieve it seamlessly. Historically, OT and IT teams have operated in silos with different leadership, goals, and skill sets. Even when they are placed under unified leadership, alignment doesn’t happen automatically; it requires time, intentional effort, and mutual understanding. IT often lacks insight into operational realities in the field, while OT may not fully grasp IT’s strategic concerns, such as cybersecurity. Until both sides truly understand each other’s world, meaningful integration will remain difficult. Limited resources and funding, especially in industries like oil and gas, make this challenge even harder to overcome.

“Even when companies bring IT and OT teams under a unified leadership structure, achieving true alignment requires time. It requires an intentional effort, a common understanding.” – Seann Gallagher

Start Where Your Data Starts: Integration Begins in the Field

When integrating data across systems, the starting point should always be the field level, not the cloud. While many projects are driven by cloud or analytics goals, the foundation of all data lies in the field. If bandwidth or data resolution is limited at the source, no amount of cloud software can fix that. Organizations often realize too late that their cloud initiatives are constrained by poor-quality or low-frequency data coming from the field.

“The field level is the foundation of all your data, and that is where you have to start: at least evaluate where you are in terms of your field preparedness, because no amount of software can create bandwidth where it doesn't exist.” – Scott Williams
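A rough back-of-the-envelope calculation shows why field bandwidth caps everything downstream. This Python sketch is illustrative only: the tag count, bytes per sample, and link speed are hypothetical placeholders rather than figures from the panel, and it ignores protocol overhead, compression, and report-by-exception, all of which change the real answer.

```python
def required_bps(tag_count: int, updates_per_second: float, bytes_per_sample: int) -> float:
    """Raw payload bandwidth in bits per second, ignoring protocol overhead."""
    return tag_count * updates_per_second * bytes_per_sample * 8


def max_update_rate(link_bps: float, tag_count: int, bytes_per_sample: int) -> float:
    """Highest per-tag update rate (samples per second) the link can carry for all tags."""
    return link_bps / (tag_count * bytes_per_sample * 8)


# Hypothetical remote site: 500 tags, ~16 bytes per sample (value, timestamp,
# quality), reached over a 9,600 bit/s radio link.
TAGS, SAMPLE_BYTES, LINK_BPS = 500, 16, 9_600

need = required_bps(TAGS, updates_per_second=1.0, bytes_per_sample=SAMPLE_BYTES)
ceiling = max_update_rate(LINK_BPS, TAGS, SAMPLE_BYTES)
print(f"1 s updates need {need:,.0f} bit/s; "
      f"link allows one update per tag every {1 / ceiling:.1f} s")
```

Under these assumed numbers, one-second data for every tag would need roughly 64,000 bit/s, while the link supports only about one update per tag every 6.7 seconds. No cloud software can recover the missing resolution; the constraint has to be addressed in the field.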

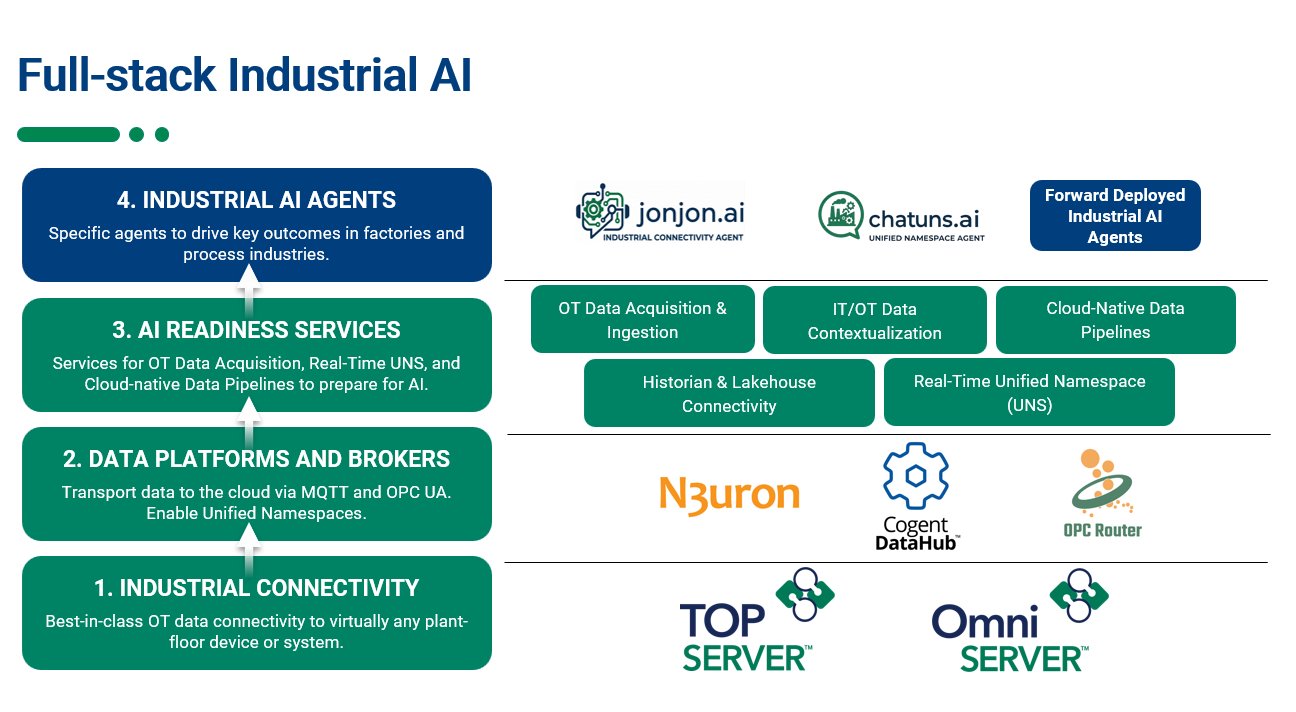

After assessing field readiness and bandwidth limitations, the next step is to evaluate your software stack. Different software solutions vary in compatibility and capability, so it’s important to understand what you have, where it fits, and how it integrates with other systems. In short: start where your data starts—in the field—and build from there.

Seven Essential Areas to Consider When Breaking Down Data Silos

What does it really take to unify data across your enterprise? In our discussion, the panelists broke down seven essential areas that companies must address to successfully integrate data, eliminate silos, and turn raw information into real-time, actionable insights. Whether you're modernizing legacy infrastructure, scaling for future growth, or preparing for analytics and AI, these practical takeaways will help you build a secure, collaborative, and future-ready data framework, one step at a time.

1. Data Integration

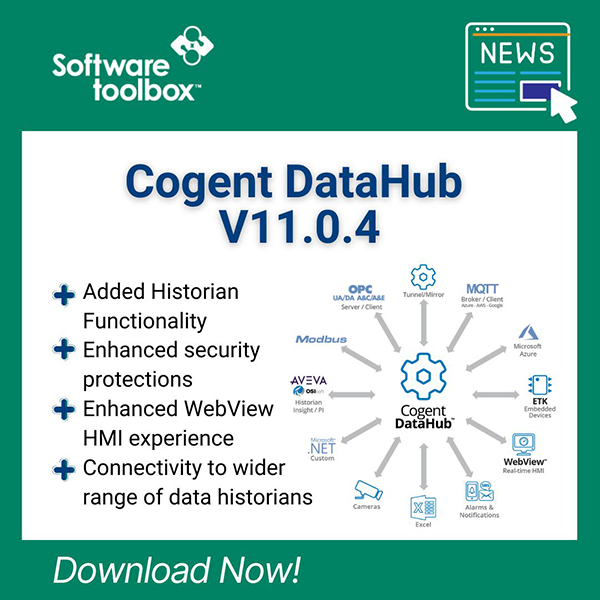

Over the past six to seven years, data integration has become significantly more achievable thanks to the evolution of software tools. Where once custom coding against APIs was the only option, today’s market offers a wide array of off-the-shelf integration tools, including data hubs and platforms built specifically for connecting disparate systems. These tools allow users to pull in data from multiple sources, transform it, and route it where it’s needed, all with far less effort than before.
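The pull, transform, and route pattern that these tools automate can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular data hub product works: the source names, tag names, unit conversion, and destinations are hypothetical, and a production hub would add buffering, retries, and security.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass
class Reading:
    source: str  # system that produced the value
    tag: str
    value: float


def pull(sources: Dict[str, Callable[[], Dict[str, float]]]) -> List[Reading]:
    """Collect current values from every registered source."""
    return [Reading(name, tag, value)
            for name, read in sources.items()
            for tag, value in read().items()]


def psi_to_kpa(r: Reading) -> Reading:
    """Example transform: convert tags ending in '_PSI' to kPa."""
    if r.tag.endswith("_PSI"):
        return Reading(r.source, r.tag[:-4] + "_KPA", r.value * 6.894757)
    return r


def route(readings: Iterable[Reading],
          routes: Dict[str, Callable[[Reading], bool]]) -> Dict[str, List[Reading]]:
    """Send each reading to every destination whose predicate accepts it."""
    batch = list(readings)
    return {dest: [r for r in batch if accepts(r)]
            for dest, accepts in routes.items()}


# Hypothetical sources: each returns a snapshot of tag -> value.
sources = {
    "plc_site_a": lambda: {"WELL1_PRESSURE_PSI": 850.0, "WELL1_FLOW_BPD": 1200.0},
    "legacy_scada": lambda: {"TANK3_LEVEL_FT": 12.5},
}
readings = [psi_to_kpa(r) for r in pull(sources)]

# Hypothetical destinations and the rule for what each one receives.
routes = {
    "historian": lambda r: True,                            # everything
    "cloud_analytics": lambda r: r.tag.startswith("WELL"),  # well data only
}
delivered = route(readings, routes)
for dest, items in delivered.items():
    print(dest, [r.tag for r in items])
```

Keeping sources, transforms, and routes as separate pieces is what lets a new system or destination be added without rewriting the others.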

However, not all software is created equal. Some tools are built for enterprise-scale systems, while others are better suited to smaller environments. Choosing the right tool for the job is essential, and that choice isn’t just about functionality but also about how well each tool fits into your overall software stack.

One emerging challenge is the rise of software stack silos, where different teams within the same organization adopt separate tools that don’t communicate well with each other. This creates a new kind of fragmentation, even as the data itself may be more accessible. To avoid this, successful integration efforts require cross-team collaboration, careful software evaluation, and a unified approach.

In short, effective data integration is achievable today, but only when you combine the right tools, protocols, and teamwork. Modern tools make integration easier, but it still requires strategy. Success depends on understanding your data, your use cases, and your software environment, and on aligning all three to move data reliably, securely, and at scale.

2. Contextualization

Contextualization is often misunderstood, yet it's one of the most critical components of a successful data integration strategy. It’s not just about collecting and storing data; it’s about making that data understandable, relatable, and usable at every level of the system.

Without proper contextualization, even the most well-structured data lakes or cloud-based analytics platforms fall short. Teams waste time reverse-engineering the meaning behind data points: what a tag represents, where it came from, and how to use it. The problem is worse when the original architects and engineers are no longer available.

Effective contextualization involves adding metadata and meaning to data as it flows from the field through the enterprise and into the cloud, and even back again. It’s a layered, continuous process that must happen at every stage of the data lifecycle. Context links sensor data to physical assets, ties operational data to business systems, and enables different teams to use the same data confidently for different purposes.
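As a small illustration of linking sensor data to physical assets, the Python sketch below attaches site, asset, measurement, and unit metadata to raw readings. The PLC addresses, site and well names, and the dictionary-based context model are all hypothetical; in practice this mapping would be maintained in an asset model or data hub configuration and applied at each layer the data passes through.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class AssetContext:
    site: str
    asset: str
    measurement: str
    unit: str


# Hypothetical context model mapping raw PLC addresses to physical assets.
CONTEXT = {
    "PLC7.N7:12": AssetContext("North Field", "Well 14", "tubing pressure", "psi"),
    "PLC7.N7:13": AssetContext("North Field", "Well 14", "casing pressure", "psi"),
}


def contextualize(tag: str, value: float) -> dict:
    """Attach site, asset, measurement, and unit metadata to a raw reading."""
    ctx: Optional[AssetContext] = CONTEXT.get(tag)
    # Unknown tags keep context=None so gaps in the model stay visible
    # instead of being silently dropped.
    return {"tag": tag, "value": value,
            "context": asdict(ctx) if ctx is not None else None}


print(contextualize("PLC7.N7:12", 1180.0))
print(contextualize("PLC9.F8:3", 42.0))
```

The first call returns the reading together with its well, site, and unit, so a downstream user never has to guess what `PLC7.N7:12` means. The second returns no context, flagging an unmapped tag for follow-up.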

When done right, contextualization reduces time-to-insight, supports predictive analytics, and enables faster, better-informed decisions. For example, a properly contextualized data model allowed one oil and gas company to identify failure patterns and configure proactive alerts in a single day.

In short, contextualization turns raw data into actionable knowledge, and it's essential for scaling data integration and delivering long-term value.

“Contextualization isn't something you just go out and buy. It requires some technology, but it's really that mindset and continuous process all the way through. And as you do that, be aware it's going to drive more thirst for data, but if you're getting results, it's going to be worth it.” – John Weber

3. Data Quality and Reliability

In any successful data integration strategy, data quality and reliability are non-negotiable. Without them, even the most advanced dashboards and analytics tools lose their value—and worse, they risk losing user trust.

At the operational level, many protocols (such as OPC) deliver not just the value of a data point but also its timestamp and quality. These quality indicators tell systems and users whether the data is trustworthy. However, as data moves up the stack, from SCADA to enterprise systems to the cloud, this quality information often gets lost, especially if the software tools involved don’t preserve it.
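The Python sketch below shows how quality can be lost at a single hop. The quality flag is simplified to a string loosely mirroring the Good, Uncertain, and Bad categories of OPC status codes. The tag name, the scenario (a communication failure reported as 0.0 with Bad quality), and both forwarding functions are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DataPoint:
    tag: str
    value: float
    timestamp: datetime  # source timestamp from the field device
    quality: str         # simplified: "Good", "Uncertain", or "Bad"


def to_cloud_lossy(p: DataPoint) -> dict:
    """Anti-pattern: forwards only the value, so consumers can't judge trust."""
    return {"tag": p.tag, "value": p.value}


def to_cloud_preserving(p: DataPoint) -> dict:
    """Forwards value, source timestamp, and quality together."""
    return {"tag": p.tag, "value": p.value,
            "timestamp": p.timestamp.isoformat(), "quality": p.quality}


point = DataPoint("WELL14_TUBING_PSI", 0.0,
                  datetime(2025, 6, 26, 14, 0, tzinfo=timezone.utc), "Bad")
print(to_cloud_lossy(point))       # looks like a genuine zero reading
print(to_cloud_preserving(point))  # the "Bad" flag travels with the value
```

Once the lossy hop has run, no downstream dashboard can tell a failed device from a well that really reads zero, which is why tool selection matters at every layer.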

“Quality is not just a metric that gets passed up, but it's also a metric you must measure. And so, you must develop KPIs, you must develop alerts and reports around that data quality.” – Scott Williams

To prevent that loss of context and confidence, organizations must:

- Select tools that retain data quality throughout the integration pipeline.

- Develop KPIs and alerts to actively monitor data quality, resolution, and communication status.

- Treat data as a product, just like oil, gas, or widgets—something to be measured, monitored, and protected.
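Measuring data quality can start simply. This Python sketch computes two example KPIs, the percentage of tags with Good quality and the list of tags that have stopped updating, and turns them into alert messages. The tag names, the 15-minute staleness window, and the 95% target are hypothetical thresholds chosen for illustration; real values depend on each site's scan rates and use cases.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass
class Sample:
    tag: str
    quality: str           # simplified quality flag: "Good", "Uncertain", "Bad"
    last_update: datetime  # when the tag last reported


def quality_kpis(samples: List[Sample], now: datetime,
                 stale_after: timedelta) -> dict:
    """Percent of tags with Good quality, plus tags that have stopped updating."""
    good = sum(1 for s in samples if s.quality == "Good")
    stale = [s.tag for s in samples if now - s.last_update > stale_after]
    return {"pct_good": 100.0 * good / len(samples) if samples else 0.0,
            "stale_tags": stale}


def alerts(kpis: dict, min_pct_good: float) -> List[str]:
    """Turn KPI values into human-readable alert messages."""
    msgs = []
    if kpis["pct_good"] < min_pct_good:
        msgs.append(f"Data quality {kpis['pct_good']:.1f}% is below target {min_pct_good:.1f}%")
    for tag in kpis["stale_tags"]:
        msgs.append(f"{tag} has not updated within the expected window")
    return msgs


now = datetime(2025, 6, 26, 14, 0, tzinfo=timezone.utc)
samples = [
    Sample("WELL14_TUBING_PSI", "Good", now - timedelta(seconds=30)),
    Sample("WELL14_CASING_PSI", "Bad", now - timedelta(seconds=30)),
    Sample("TANK3_LEVEL_FT", "Good", now - timedelta(hours=2)),
    Sample("METER2_FLOW_MCFD", "Good", now - timedelta(seconds=45)),
]
kpis = quality_kpis(samples, now, stale_after=timedelta(minutes=15))
for msg in alerts(kpis, min_pct_good=95.0):
    print(msg)
```

Staleness is checked separately from the quality flag on purpose: a tag can report Good quality yet stop updating, and users need to know about both conditions.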

Why does this matter? Once users lose trust in a dashboard or report because of inaccurate or inconsistent data, that trust is extremely difficult (and costly) to regain. By proactively measuring and alerting on data integrity, organizations can inform users when issues arise, maintaining confidence and keeping operations running smoothly.

In short, reliable data builds trust, and trust is essential for any integration effort to deliver lasting value.

4. Scalability and Flexibility

Scalability and flexibility are essential for data integration in oil and gas, where companies often manage thousands or even millions of data points across vast operations. To support this scale without creating long-term limitations, two things are critical: a systems-level approach and the use of open standards.

Scalability begins with a clear understanding of your goals, the size and nature of your data, and how it will be used. Choosing the right tools early on and knowing when to transition to more robust solutions as you grow is key to future-proofing your architecture.

“You don't want to get locked into a specific vendor piece of equipment. All it takes is one merger [or] acquisition and you've got mixed systems. One thing to solve it is through open standards. You must make sure you have solutions that use open standards.” – John Weber

Flexibility comes from using open standards and evaluating whether to go with tightly integrated vendor suites or a best-in-class approach for each layer. Each has trade-offs: single-vendor tools may offer easier interoperability but carry risk if that vendor’s direction changes. Best-in-class tools offer modularity and performance but may pose integration challenges.
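In software terms, avoiding lock-in means having downstream logic depend on a shared interface rather than on any single vendor's API. In this minimal Python sketch, the `TagReader` protocol stands in for an open standard such as OPC UA. `VendorAReader`, `VendorBReader`, and `daily_report` are hypothetical names that return placeholder values. The sketch illustrates the design principle only and does not implement any real protocol.

```python
from typing import Dict, Iterable, Protocol


class TagReader(Protocol):
    """Common read interface; plays the role an open standard plays between vendors."""
    def read(self, tags: Iterable[str]) -> Dict[str, float]: ...


class VendorAReader:
    """Hypothetical adapter for the system a company already runs."""
    def read(self, tags: Iterable[str]) -> Dict[str, float]:
        return {t: 100.0 for t in tags}  # placeholder values


class VendorBReader:
    """Hypothetical adapter for a system inherited through an acquisition."""
    def read(self, tags: Iterable[str]) -> Dict[str, float]:
        return {t: 200.0 for t in tags}  # placeholder values


def daily_report(reader: TagReader, tags: Iterable[str]) -> str:
    """Downstream logic depends only on the interface, not on either vendor."""
    values = reader.read(tags)
    return ", ".join(f"{t}={v:g}" for t, v in values.items())


print(daily_report(VendorAReader(), ["WELL1_FLOW"]))
print(daily_report(VendorBReader(), ["WELL9_FLOW"]))
```

When a merger brings in a second system, only a new adapter is needed; `daily_report` and everything built on it stay unchanged.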

Ultimately, success comes from matching tools to use cases, planning for future growth, and staying intentional in your design choices. Build smart today to avoid costly rebuilding tomorrow.

5. Collaboration

While data integration often focuses on technology, successful outcomes depend just as much on collaboration—both across vendors and within internal teams.

From a vendor perspective, collaboration means moving beyond finger-pointing to problem-solving. System integrators and solution providers must work together as trusted partners, focusing on the shared goal of delivering results for the end user. Vendors who embrace openness and cooperation, rather than pushing a closed, one-size-fits-all stack, enable more adaptable, successful solutions.

Internally, collaboration between OT and IT teams is just as critical. These groups bring different skill sets, priorities, and experiences. For integration projects to succeed, teams must find common ground, align goals, and share knowledge. OT can provide real-world context from the field, while IT ensures data security, governance, and scalability.

“I think it's important for end users to consider vendors that operate with a willingness to work with other vendors. There's going to be a variety of software in the stack, so you really must make sure that your vendors are willing to work with others, willing to collaborate, work those projects together and not just turn their backs when the end users are trying to create some cross-functional solutions.” – Seann Gallagher

Ultimately, collaboration is the bridge between strategy and execution, technology and outcomes. When teams work together—across companies and departments—they build scalable, future-ready data integration solutions that serve the entire organization.

6. Cybersecurity and Data Governance

Cybersecurity and governance are essential elements of any data integration strategy, and they must be approached as ongoing processes, not one-time purchases.

Security isn’t just about tools and protocols: it’s also about people. Human behavior is often the weakest link in any system, so governance must include training, oversight, and responsible access management. It also means evaluating whether your technologies, suppliers, and integration partners stay current with evolving regulations and security standards, especially in regulated industries like oil and gas.

“Security is a process, not a product. You don't just go out and buy it, you have got to take a holistic approach. So, never forget that sometimes the most insecure thing comes in and out of the door on two legs. And that's something to manage.” – John Weber

Over-securing can also be a risk. While strong security is non-negotiable, overly restrictive measures can damage usability. When systems become too difficult to access, users may circumvent them, undermining the very protection in place.

The key is to strike a balance between protection and practicality, creating secure, compliant, and user-friendly systems that support, not hinder, operational goals.

7. Analytics Readiness

Analytics readiness is the final step, but it depends on everything that comes before it. From reliable field data and bandwidth to proper contextualization, integration, governance, and collaboration, every factor contributes to whether your organization is truly prepared to extract value from analytics, AI, and machine learning.

You can’t do advanced analytics without clean, accurate, and complete data, and that requires a common data framework. But you can’t build that framework all at once. You need to start small, define high-impact, achievable use cases, and show quick wins to gain executive support and funding.

“I like to use the phrase: you can't boil the ocean. You're not going to get all the data into a common data framework all at once. So, you've got to start somewhere and define something that can be accomplished in a reasonable timeframe while keeping the overall vision.” – Seann Gallagher

In short, analytics success depends on:

- Starting with clearly defined goals and use cases

- Establishing scalable, standards-based architectures

- Aligning with business needs, not just technological trends

- Building gradually while keeping the bigger picture in mind

Without data readiness, AI is just hype. With it, analytics can drive real, measurable business value, one step at a time.

Practical Steps to Data Integration and Digitalization

In this webinar, our three industry experts shared real-world strategies for unifying data across legacy and modern systems without disruption, focusing on seven critical areas: integration, contextualization, data quality, scalability, collaboration, cybersecurity, and analytics readiness. Their insights highlight how to build a scalable, standards-based data framework that aligns with business goals, supports digital transformation, and delivers measurable results.

Watch the Full Panel Discussion Now

Want to hear the full conversation and get practical advice directly from the experts? Watch the on-demand panel discussion webinar: “No More Silos: How to Turn Data into Decisions Across the Oil & Gas Enterprise”

Whether you're in IT, OT, or leadership, this discussion will give you the tools and perspective to start turning data into decisions—without the disruption of rip-and-replace.

Meet the Panelists

Scott Williams – Senior Solutions Consultant, The Integration Group of Americas (TIGA). Scott is a seasoned data strategist, cloud and edge computing specialist, and SCADA systems expert with more than 30 years of experience across various industries. His mission is to help organizations navigate the complexities of Industry 4.0 and enable data-driven decision-making that boosts operational efficiency and performance. At TIGA, he supports clients in enhancing safety, reliability, and productivity through industrial systems integration and SCADA automation, especially in the oil and gas and energy sectors.

Seann Gallagher – Customer Success Manager, Software Toolbox. With over 30 years of experience in industrial automation, Seann has played a key role in deploying and supporting large-scale operational data systems including SCADA, historians, and field communication networks. Based in Houston, many of his projects have focused on oil and gas operations. In his current role, Seann works closely with customers and internal teams to ensure organizations maximize the value of their industrial data solutions. He began his career in technical support at a Wonderware distributor, which gave him a strong foundation in industrial software integration.

John Weber – President and Founder, Software Toolbox. John served as moderator of the webinar and brought more than 30 years of experience helping industrial users get more value from their automation systems. As the founder of Software Toolbox, he has worked across industries to deliver solutions that bridge data gaps and simplify complex architectures. John provided both moderation and expert commentary during the panel, drawing from his early career roots in technical support and his passion for demystifying technology for real-world use.