Welcome back to the Summer of IoT Blog Series. In this blog we’ll explore how Cogent DataHub’s External Historian and built-in Store and Forward features ensure efficient data flow without risking network security at the plant or enterprise level.

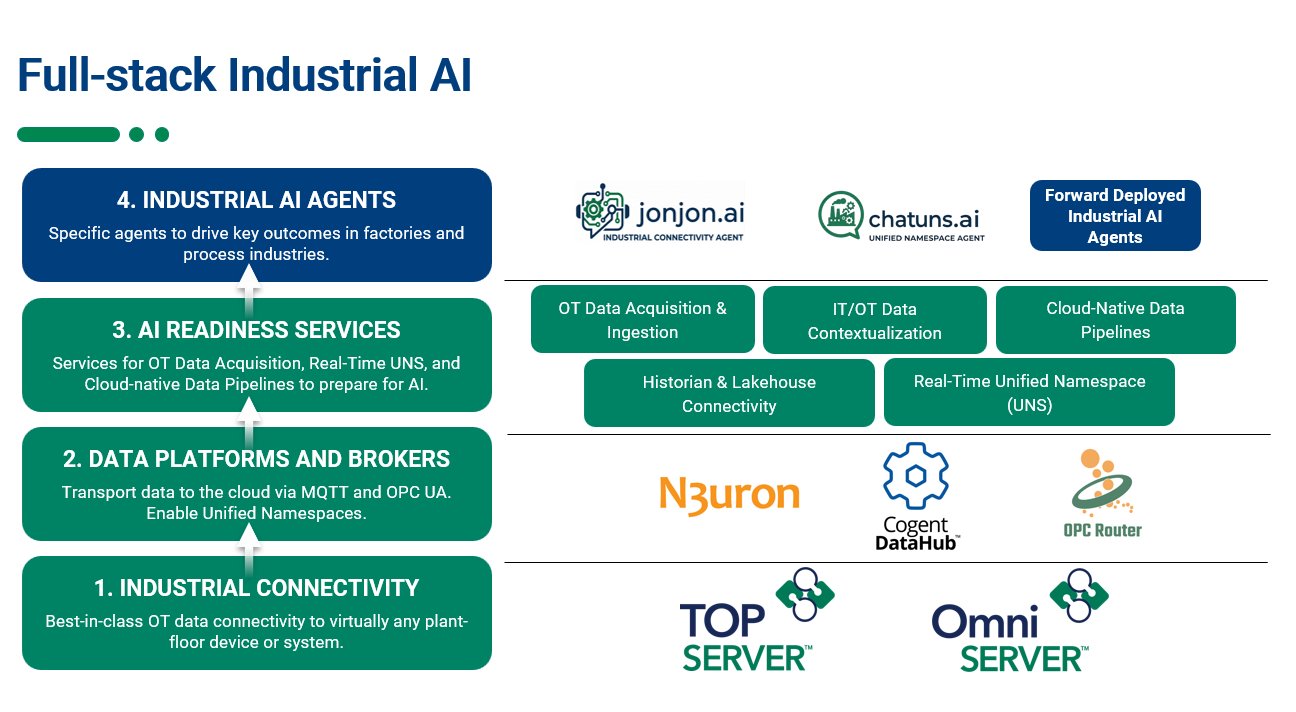

As organizations work toward Industry 4.0 and digital transformation, ensuring secure and reliable OT/IT convergence is essential for breaking down data silos and creating cohesion between your business systems. IoT devices provide real-time data and connectivity to transform traditional industrial operations. Complementing this, historians serve as centralized data repositories that store and organize time-series data from IoT devices and other sources. Together, the data from both fuels advanced analytics, automation, and smart decision-making across the enterprise.

As industrial operations grow more dependent on interconnected smart systems, robust security measures become paramount to protect critical infrastructure from growing cybersecurity threats. That’s where Cogent DataHub’s Secure Tunneling features come in, offering a secure and efficient way to move data without opening inbound ports at the plant or enterprise level.

Real-Time vs Historical Tunneling

Tunneling emerged from concerns about open inbound ports, which pose significant cybersecurity risks. It solves the critical challenge of enabling data exchange in manufacturing without exposing OT or IT systems to cyber threats.

In a previous blog, “There’s more to IT and Why Your IT Team has Concerns”, we discussed in detail how these entry points can be exploited by malicious attackers, leading to unauthorized access, the deployment of malware, and ransomware attacks. This is a key reason why many users have turned to Cogent DataHub, a versatile middleware software solution that addresses these security risks through advanced tunneling capabilities.

For real-time data, Cogent DataHub can securely tunnel values across a network. In this configuration, data is updated as the source generates it, in “real time”. This provides immediate insight and operational control of your system, with the added benefit of completely avoiding DCOM and Windows security challenges when remote OPC DA connections would otherwise be necessary.

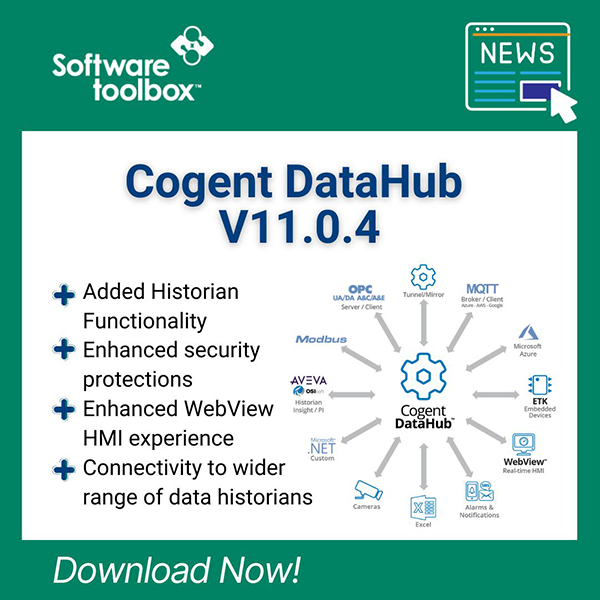

With the release of Cogent DataHub V10, multiple secure options were introduced for managing historical data, including historical data tunneling. Beyond the general tunneling benefits of streaming data securely through proxy servers and DMZs with no open inbound firewall ports, historical tunneling can remotely source historical data (as opposed to real-time data), tunnel it, and deliver it securely to any compatible historian for logging, with built-in Store and Forward (more on that below).

Why Secure Historical Data Transfer Matters

Cogent DataHub Tunneling for historical data focuses on the secure delivery of previously collected and stored time-series data between two historical repositories. Specifically, the DataHub Tunnel Push and Pull External Historian functionality was developed to deliver historical data securely and efficiently to destinations such as AVEVA PI, InfluxDB, REST APIs, and more, without requiring open inbound ports at the plant level.

Network and internet connections will inevitably break, fail, or otherwise become unreliable. Store and forward refers to a type of database connection in which data is first stored locally on disk and later forwarded to its destination. Cogent DataHub provides built-in Store and Forward capabilities for database logging, cloud connections, and external historian connections.

Combining Store and Forward with the External Historian Tunneling feature creates a system that can rapidly recover from any communication failure without losing any data.
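To make the store-and-forward idea concrete, here is a minimal, generic sketch of the pattern in Python. It is not DataHub’s implementation or API: the `StoreAndForward` class, the SQLite file used as the local disk buffer, and the `send` callback standing in for the network link are all hypothetical. Every value is written to disk first and is only deleted after the destination confirms delivery, so a failed connection leaves the backlog intact for the next attempt.

```python
import sqlite3
from typing import Callable


class StoreAndForward:
    """Hypothetical store-and-forward buffer: persist locally, forward later."""

    def __init__(self, path: str, send: Callable[[str, float, float], bool]):
        # `send` stands in for the network link; it returns True on confirmed delivery.
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS buffer ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, tag TEXT, ts REAL, value REAL)"
        )
        self.send = send

    def store(self, tag: str, ts: float, value: float) -> None:
        # Always write to local storage first, whether or not the link is up.
        self.db.execute(
            "INSERT INTO buffer (tag, ts, value) VALUES (?, ?, ?)", (tag, ts, value)
        )
        self.db.commit()

    def forward(self) -> int:
        # Replay the backlog oldest-first; stop at the first failure so order is kept.
        sent = 0
        rows = self.db.execute(
            "SELECT id, tag, ts, value FROM buffer ORDER BY id"
        ).fetchall()
        for row_id, tag, ts, value in rows:
            if not self.send(tag, ts, value):
                break  # link is down: keep this row and everything after it
            self.db.execute("DELETE FROM buffer WHERE id = ?", (row_id,))
            self.db.commit()
            sent += 1
        return sent

    def pending(self) -> int:
        # Number of values still waiting to be delivered.
        return self.db.execute("SELECT COUNT(*) FROM buffer").fetchone()[0]


# Usage: a simulated outage followed by reconnection and backfill.
historian = []  # stands in for the remote historian
link_up = False


def send(tag: str, ts: float, value: float) -> bool:
    if not link_up:
        return False
    historian.append((tag, ts, value))
    return True


sf = StoreAndForward(":memory:", send)
for t in range(3):
    sf.store("Line1.Temp", float(t), 20.0 + t)

first = sf.forward()   # link down: nothing is lost, all 3 values stay buffered
link_up = True
second = sf.forward()  # link restored: the backlog is backfilled in order
```

Deleting each row only after a successful send is the key design choice: if the process or the link fails mid-replay, the worst case is resending one value rather than losing it. A production buffer would also need size limits and batching, which this sketch leaves out.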

Tunneling Historical Data with Store and Forward

Just like real-time tunneling, historical data tunneling uses a Cogent DataHub instance on each end of the connection. One instance collects data from a data source and transmits it to the other instance, which then logs it to the target historian. DataHub External Historian tunnels combined with Store and Forward can be configured in multiple secure ways:

- Pushing data from the Sender to the Receiver

- Pulling data from the Sender by the Receiver

- Routing data through a DMZ, where both pushing and pulling occur

The Sender can leverage a local InfluxDB instance that continues to buffer data if the connection between the two Cogent DataHubs is ever lost. Upon reconnection, the missing data is transmitted from InfluxDB and backfilled in your historian.

Tunnel Push

Using the Tunnel Push External Historian option, the sending Cogent DataHub instance is configured to forward historical values to the receiving Cogent DataHub. The historian on the sending side must be InfluxDB (an additional plugin and optional download at install time) or an ODBC-compliant database. The historian on the receiving side can be any supported historian. This configuration lets the plant floor historize all of its data while requiring only an outbound connection, so no inbound port has to be opened on the plant network.

Tunnel Pull

Using the Tunnel Pull External Historian option, the receiving Cogent DataHub pulls data from the external historian on the sending Cogent DataHub instance and stores it in the supported external historian on the receiving side. Again, the historian on the sending side must be InfluxDB or an ODBC-compliant database. In this configuration, the tunneling connection is made outbound from the receiving side, giving you the flexibility to choose the direction that best fits your systems.

Maximizing Security with a DMZ

A DMZ is an effective way to provide a data access point that both IT and OT networks can reach at the same time, without either network having to accept connections from the other.

This scenario daisy-chains a Tunnel Push and a Tunnel Pull through a DMZ. The Cogent DataHub instance on the sending side pushes data to the Cogent DataHub instance in the DMZ, which stores it in InfluxDB. At the same time, the Cogent DataHub instance on the receiving side pulls the data from the DMZ DataHub instance and stores it in its historian. Because both the sending and receiving sides only make outbound connections to the DMZ, this high-security configuration lets you keep the firewalls on both sides closed to inbound traffic. Combining tunneling with a DMZ lets business units share interconnected data streams without exposing their networks to inbound connections.
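The security argument behind all three configurations comes down to which machine initiates each tunnel connection: the side that accepts a connection is the one that needs an inbound firewall port. The Python sketch below models only that rule. The host names (`plant`, `dmz`, `enterprise`) and the `Hop` type are hypothetical, and the sketch says nothing about how DataHub itself is configured.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Hop:
    """One tunnel link that carries data from `source` to `dest` (hypothetical model)."""
    source: str
    dest: str
    mode: str  # "push": source dials out to dest; "pull": dest dials out to source

    def listener(self) -> str:
        # The host that accepts the incoming connection needs an inbound port open.
        if self.mode == "push":
            return self.dest
        if self.mode == "pull":
            return self.source
        raise ValueError(f"unknown mode: {self.mode}")


def inbound_hosts(route: List[Hop]) -> Set[str]:
    """Return every host that must allow inbound connections for this route."""
    return {hop.listener() for hop in route}


push = [Hop("plant", "enterprise", "push")]
pull = [Hop("plant", "enterprise", "pull")]
dmz = [Hop("plant", "dmz", "push"), Hop("dmz", "enterprise", "pull")]

for name, route in [("push", push), ("pull", pull), ("dmz", dmz)]:
    print(name, sorted(inbound_hosts(route)))
# push ['enterprise']
# pull ['plant']
# dmz ['dmz']
```

With a single push or pull, one of the two sites still has to listen for the other. Daisy-chaining a push and a pull moves the only listener into the DMZ, which is why both the plant and the enterprise can keep inbound traffic blocked.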

As you can see, Cogent DataHub's External Historian Tunneling features, paired with Store and Forward, offer a secure and efficient way to transfer historical data to historians without opening inbound ports at either the plant or enterprise level, all while maintaining historical data in a local database. This capability is crucial for organizations pursuing Industry 4.0 and digital transformation, as it enables secure and reliable OT/IT convergence. It supports historizing IoT data without data loss, and data can be pushed from the source, pulled from it, or routed through a DMZ that combines both methods. By minimizing cybersecurity exposure and ensuring reliable data flow, Cogent DataHub provides a robust and flexible integration layer for converged infrastructures.

Learn What’s Next

Stay tuned for a future blog post, where we will walk you through configuring the Cogent DataHub External Historian and pairing it with Store and Forward. These features increase the value of a tunneling solution by preventing data gaps during network disruptions. Combining the power of tunneling with Store and Forward creates more resilient data streams.

Ready to Secure Your Historian Data Flow?

Want to see how this could work in your environment? Request a consultation with our team to discuss your historian integration needs and explore the best configuration for your architecture.