Cogent DataHub is known for its extensive connectivity to OPC DA, OPC UA, OPC A&E, databases, Excel, ODBC, DDE, Linux and Modbus. The latest Version 10 release adds a large amount of new and expanded functionality, further confirming our saying that "once the data is in DataHub, it can go anywhere".

In this blog post, I'll provide insight into key new features, including support for OPC UA Alarms & Conditions, support for Sparkplug B for both the MQTT Client and Broker, support for reading and writing with multiple popular external historians, and more. I'll cover not only what these new features provide but also how they can help you deliver secure, integrated connections within and between your plants, to the cloud and beyond, giving you the data and perspective you need to make critical decisions for your enterprise.

If you’re a current DataHub user or just looking for a solution to solve your particular integration challenges, you can take advantage of the following features in DataHub Version 10:

- Expansion of Alarms and Notification Capabilities including support for OPC UA Alarms & Conditions

- Sparkplug B Support for MQTT Client and Broker

- Store and Forward Support for Tunneling, Database, Cloud and External Historian Applications with InfluxDB

- Read/Write Integration with Many Popular External Historians

- On-Premise vs. Hosted - DataHub V10 Offers Both as a Microsoft Azure Managed Application

- Greater Configuration Scalability & Efficiency with CSV Import for Key Functionalities

So why might you need any of these features for a project? Let’s dive in and start with one of the most powerful new features. Prefer to go hands-on and see DataHub V10 in action? Click Here to Download V10

1. DataHub V10 Expands Alarms and Notification Capabilities

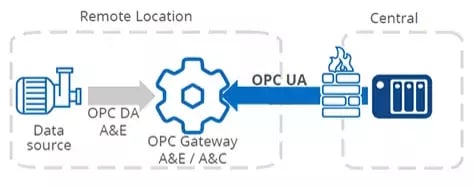

DataHub has supported OPC Classic Alarms & Events (client and server) for quite some time. With the V10 release, DataHub now also supports the Alarms & Conditions profile for both the OPC UA client and server interfaces.

Beyond providing connectivity for clients and servers supporting OPC UA A&C, this added functionality allows DataHub to act as a gateway between OPC UA A&C clients/servers and OPC Classic Alarms & Events clients/servers. This makes it easier for users to modernize legacy systems that rely on OPC Classic A&E without having to completely rip and replace existing components.

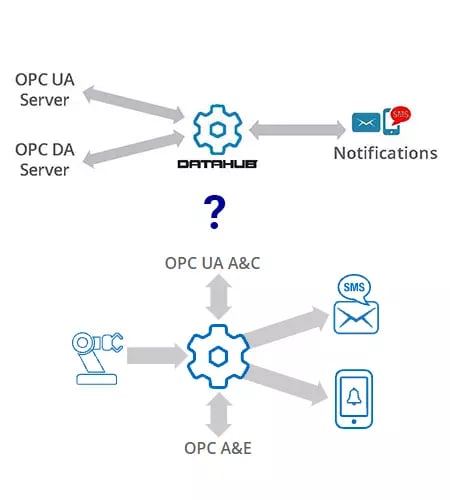

Additionally, it is now possible to create, aggregate and send OPC alarms for OPC Classic A&E and/or OPC UA A&C. This includes templates for different alarm states that can be used when configuring the desired alarms/conditions, and the ability to bind them to live data. These alarms/conditions can be used to trigger messages via email, SMS or social media (via Twilio), or even to run scripts that carry out some other action. Together, these capabilities help you keep key personnel aware of irregular situations in your enterprise in a timely fashion, so that appropriate action can be taken as soon as possible to mitigate any undesirable fallout.

2. Sparkplug B Expands MQTT Client and Broker for Reliable Edge Connectivity

If you're not familiar with Sparkplug B for MQTT, you'll certainly find tons of resources out there going into detail on what it is with varying degrees of depth. For our purposes, Sparkplug B takes the openness of MQTT and enhances its usability and reliability by giving it some structure for greater compatibility between clients and brokers supporting it. Namely, it clearly defines topic addressing and namespace so that there's no ambiguity between clients and brokers, which ensures greater interoperability.
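To make the topic addressing concrete, here is a minimal Python sketch that parses a node-level or device-level Sparkplug B topic. It assumes the `spBv1.0/<group_id>/<message_type>/<edge_node_id>/[<device_id>]` layout defined in the Sparkplug B specification. The `SparkplugTopic` class and `parse_topic` function are illustrative names and are not part of DataHub; host application STATE topics are deliberately left out.

```python
from dataclasses import dataclass
from typing import Optional

NAMESPACE = "spBv1.0"
# Node-level (N*) and device-level (D*) message types from the Sparkplug B spec.
MESSAGE_TYPES = {
    "NBIRTH", "NDEATH", "NDATA", "NCMD",
    "DBIRTH", "DDEATH", "DDATA", "DCMD",
}


@dataclass(frozen=True)
class SparkplugTopic:
    group_id: str
    message_type: str
    edge_node_id: str
    device_id: Optional[str] = None


def parse_topic(topic: str) -> SparkplugTopic:
    """Split a Sparkplug B topic into its named elements, or raise ValueError."""
    parts = topic.split("/")
    if len(parts) not in (4, 5) or parts[0] != NAMESPACE or not all(parts):
        raise ValueError(f"not a Sparkplug B node/device topic: {topic!r}")
    _, group_id, message_type, edge_node_id, *rest = parts
    if message_type not in MESSAGE_TYPES:
        raise ValueError(f"unknown message type: {message_type!r}")
    device_id = rest[0] if rest else None
    # Device-level messages carry a device id; node-level messages do not.
    if message_type.startswith("D") != (device_id is not None):
        raise ValueError(f"device id does not match message type: {topic!r}")
    return SparkplugTopic(group_id, message_type, edge_node_id, device_id)
```

Because every element has a fixed position, any subscriber can tell which group, edge node and device a message belongs to without a site-specific naming agreement.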

Additionally, to increase reliability, Sparkplug B employs a mechanism for state management that builds on the previously existing last will and testament to include the use of birth and death messages, which provide greater visibility into the current state of the connection. This is important for giving users awareness into the health of the connection so they can rely on the data being provided.
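As a rough illustration of how birth and death messages give a subscriber visibility into connection state, the Python sketch below tracks which edge nodes and devices are online. It assumes the standard Sparkplug B message types (NBIRTH, NDEATH, DBIRTH, DDEATH), where the NDEATH is registered as the node's MQTT last will so the broker publishes it if the node disconnects unexpectedly. The `SparkplugStateTracker` class is a hypothetical helper, not DataHub's implementation.

```python
from typing import Optional, Set, Tuple


class SparkplugStateTracker:
    """Tracks which edge nodes and devices are currently online."""

    def __init__(self) -> None:
        self._nodes: Set[str] = set()
        self._devices: Set[Tuple[str, str]] = set()

    def handle(self, message_type: str, node: str,
               device: Optional[str] = None) -> None:
        if message_type == "NBIRTH":
            # A fresh node birth starts a new session: forget old devices.
            self._drop_devices(node)
            self._nodes.add(node)
        elif message_type == "NDEATH":
            # When the node dies, every device behind it is offline too.
            self._nodes.discard(node)
            self._drop_devices(node)
        elif message_type == "DBIRTH" and device and node in self._nodes:
            self._devices.add((node, device))
        elif message_type == "DDEATH" and device:
            self._devices.discard((node, device))

    def _drop_devices(self, node: str) -> None:
        self._devices = {d for d in self._devices if d[0] != node}

    def is_online(self, node: str, device: Optional[str] = None) -> bool:
        if device is None:
            return node in self._nodes
        return (node, device) in self._devices


tracker = SparkplugStateTracker()
tracker.handle("NBIRTH", "Plant1/Line4")
tracker.handle("DBIRTH", "Plant1/Line4", "Filler")
tracker.handle("NDEATH", "Plant1/Line4")
print(tracker.is_online("Plant1/Line4", "Filler"))  # prints False
```

A consumer can use this kind of state to flag values from an offline node as stale instead of presenting the last received value as current.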

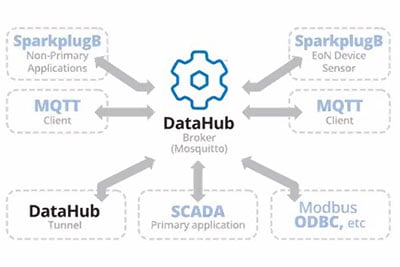

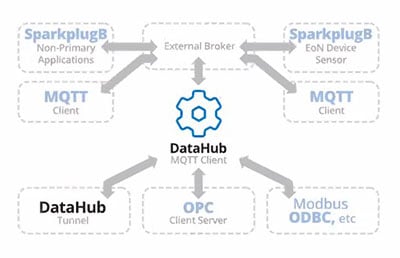

Basically, with those added functions, Sparkplug B addresses most of the common concerns and pitfalls users have expressed in the past about using MQTT for large and/or mission-critical applications. DataHub V10 supports Sparkplug B for both its MQTT client and MQTT broker and can act as a primary app, non-primary app and EoN device. V10 also expands the scalability of the MQTT broker in general, with support for larger numbers of connections from MQTT clients (up to 5000 connections) as well as support for multiple JSON formats per connection for greater flexibility.

And with security always top of mind, the DataHub MQTT broker can now disable writes from any MQTT client connection, so you can permit writes only from fully trusted MQTT clients.

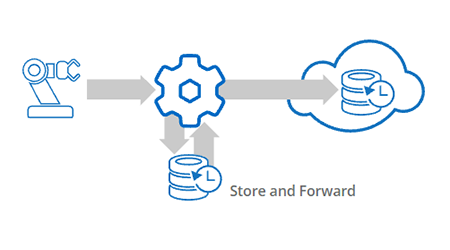

3. Store & Forward Virtually Eliminates Data Loss with DataHub V10

DataHub now installs InfluxDB v1 and supports InfluxDB v1 and v2. InfluxDB is a leading open-source time series platform developed by InfluxData that DataHub leverages for local store and forward operations. Why is store and forward important?

For applications where data loss is unacceptable (such as in the oil and gas industry for auditing and governmental reporting), store and forward provides peace of mind that when there is a connectivity issue of any sort, data isn't lost. Instead, it's buffered locally until connectivity is restored. DataHub V10 provides store and forward for applications including logging of your process data to databases, DataHub Tunneling/Mirror, IIoT/Cloud applications and/or pushing your data into external historians (more on support for external historians shortly).
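The store and forward idea itself can be sketched in a few lines of Python. This is only an illustration of the pattern under simplifying assumptions: an in-memory queue stands in for the local InfluxDB storage described above, and `send` is a hypothetical callable that raises `ConnectionError` when the destination is unreachable. It does not reflect DataHub's internals.

```python
from collections import deque
from typing import Callable, Deque, Tuple

# (timestamp, point name, value); an assumed shape for this sketch only.
Sample = Tuple[float, str, float]


class StoreAndForward:
    """Queues samples locally and forwards them in order when possible."""

    def __init__(self, send: Callable[[Sample], None]) -> None:
        self._send = send
        self._pending: Deque[Sample] = deque()

    def publish(self, sample: Sample) -> None:
        # Always store first, so nothing is lost if the send fails.
        self._pending.append(sample)
        self.flush()

    def flush(self) -> int:
        """Forward as many queued samples as the link allows; return the count."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break  # link is down: keep the backlog and retry later
            self._pending.popleft()  # remove only after a successful send
            sent += 1
        return sent

    @property
    def backlog(self) -> int:
        return len(self._pending)
```

A sample is removed from the queue only after it has been sent successfully, so an outage delays delivery but preserves both the data and its original order. A production system would persist the queue to disk so that it also survives a restart.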

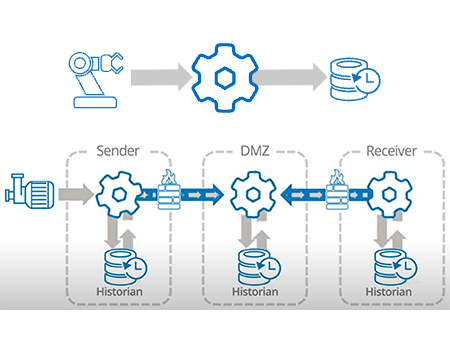

4. Integrate Historical Data Across Your Enterprise from Disparate External Sources

As I mentioned earlier, one of DataHub's core abilities is sourcing data from the many variations of OPC, databases, MQTT and more. Once the data is in DataHub, you have many options for what to do with it. Expanding on that theme in V10, DataHub now provides integration with the following common external historians for read and write operations:

- InfluxDB (a popular open-source time series database included with Chronograf and Grafana for creating dashboards and doing analysis)

- Amazon Kinesis (ingests and stores data streams for processing in Amazon Web Services)

- AVEVA Insight

- AVEVA Historian (formerly Wonderware Historian or InSQL)

- OSIsoft PI

- External Historians with REST APIs

Additionally, this release enables DataHub to tunnel historical data while also leveraging the new store and forward capabilities we just discussed.

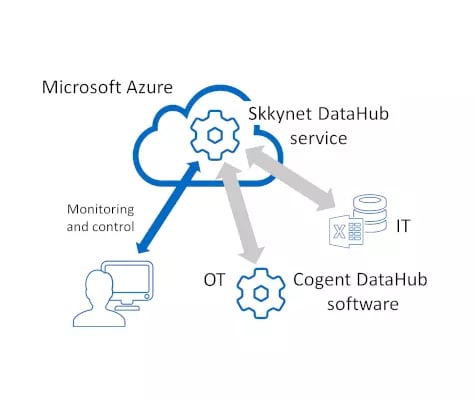

5. DataHub V10 Offers Both On-Premise and Hosted Implementation as a Microsoft Azure Managed Application

Starting with Version 10, Skkynet DataHub is now available in the Microsoft Azure Marketplace as an Azure Managed Application, optimizing secure integration of your data across your on-premise DataHub implementations. Combined with DataHub's robust tunneling functionality, this gives distributed applications a secure way to consolidate data. This option can also be more cost-effective than implementing a full DataHub application on a fully Azure-hosted virtual machine, since cost is based only on the resources actually used. For users hesitant to migrate to a fully hosted architecture, this option provides an economical, low-risk hybrid alternative.

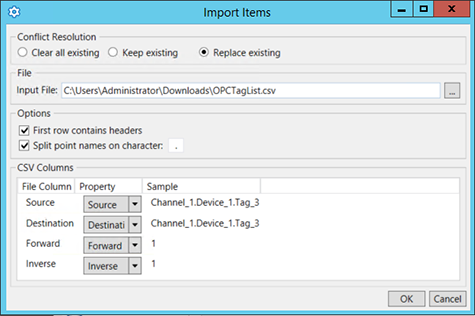

6. Expanded CSV Import Support for Greater Efficiency and Scalability

Compared to the new functionality we've just discussed, CSV import may seem like it doesn't belong in this list. I beg to differ. For anyone who has worked on a large project with lots of moving pieces to configure, the flexibility to mass configure a CSV file for import into an application can save significant amounts of time. As they say, time is money: time saved on configuration can be spent on other tasks with greater ROI.

To that end, DataHub V10 supplements the previously added ease-of-use remote configuration abilities with CSV import support for:

- OPC UA

- OPC DA

- Bridging

- MQTT Client

- Modbus

- External Historian Connections

Mass configuration, merging configurations and migrating configurations from one DataHub implementation to another are now easier than ever.
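To give a feel for why CSV-based configuration scales well, the Python sketch below generates rows for many points from a naming pattern instead of entering them one at a time. The column names (`PointName`, `SourceAddress`) and the address format are placeholders invented for this example; the actual column layout expected by each DataHub feature should be taken from the product documentation or from a file exported by DataHub.

```python
import csv
import io
from typing import Dict, Iterable, List

# Placeholder column names for illustration; not DataHub's real CSV layout.
COLUMNS = ["PointName", "SourceAddress"]


def build_rows(lines: Iterable[int], signals: Iterable[str]) -> List[Dict[str, str]]:
    """Create one row per production line and signal from a naming pattern."""
    signal_list = list(signals)
    return [
        {"PointName": f"Line{line}.{signal}",
         "SourceAddress": f"PLC{line}/{signal}"}
        for line in lines
        for signal in signal_list
    ]


def to_csv(rows: List[Dict[str, str]]) -> str:
    """Serialize the rows to CSV text with a header row."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = build_rows(range(1, 4), ["Temperature", "Pressure"])
print(to_csv(rows))
```

Three lines and two signals yield six rows here; the same script covers hundreds of lines by changing the range, which is where the time savings come from.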

These new features enhance DataHub's ability to connect your enterprise at every level, from the smallest sensor all the way to the cloud and everywhere in between, and to put your data where you need it, when you need it, for the greatest impact.

Use the following links for more information and to get started taking advantage of the new V10 features today:

- Get the Cogent DataHub V10 free trial

- Existing Users (Active Support/Maintenance) - Request V10 Upgrade

- Existing Users (Expired Support/Maintenance) - Request V10 Upgrade Quote

- DataHub V10 Frequently Asked Questions

- DataHub V10 Upgrade Best Practices

And don't forget to subscribe to the Software Toolbox blog to receive notifications about important announcements and resources like this in the future.