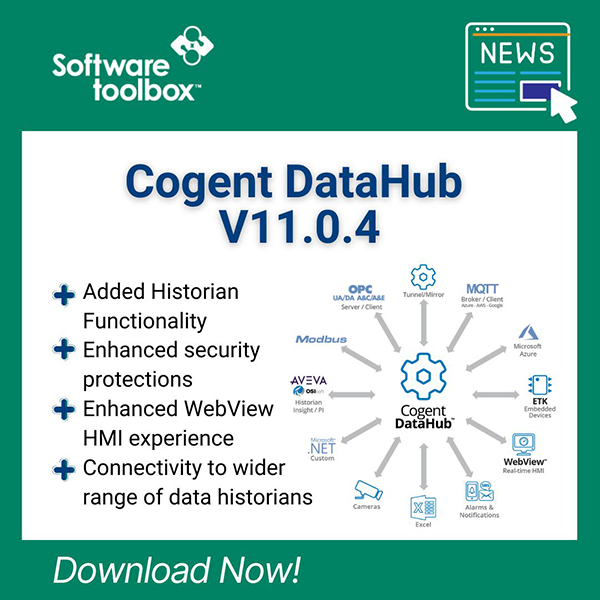

Cogent DataHub® is a gateway that provides a wide range of connectivity interfaces for data sources such as OPC UA, OPC DA, ODBC, DDE and many more. Among those interfaces, DataHub can act as both an MQTT client and an MQTT broker (including Sparkplug B support), which makes it possible to integrate MQTT with many other types of systems that don't natively support it.

One aspect of MQTT that can be complex for our users, and that can cause compatibility issues between DataHub and other MQTT clients and brokers, is the formatting of MQTT messages. As you may or may not know, while the MQTT specification defines how to construct a message header so that a broker can route the message, it does NOT specify the format of the actual message content (the payload). This becomes a challenge when MQTT clients from different developers need to work together, since they must all use the same format for the message content.
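To make the problem concrete, here is a small Python sketch. The two payloads are hypothetical (not taken from any particular product) and carry the same tank-level reading in two different JSON layouts. A broker would deliver both without complaint, because to MQTT the payload is just bytes, yet a parser written for one layout cannot read the other.

```python
import json

# Two hypothetical payloads carrying the same reading (tank level 42.5),
# published by two different MQTT clients. MQTT treats both as opaque bytes.
payload_vendor_a = b'{"tank1": {"level": 42.5}}'
payload_vendor_b = b'{"name": "tank1.level", "value": 42.5, "ts": 1700000000}'


def read_level_vendor_a(raw: bytes) -> float:
    """Parser written for vendor A's nested layout only."""
    doc = json.loads(raw)
    return doc["tank1"]["level"]


print(read_level_vendor_a(payload_vendor_a))  # 42.5

try:
    read_level_vendor_a(payload_vendor_b)
except KeyError as exc:
    # Vendor B's flat name/value layout has no "tank1" key.
    print("vendor B payload not understood:", exc)
```

A configurable parser, rather than one hard-coded layout, is what lets a single client or broker accept both styles.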

In this video blog, I'll show you how to use the Advanced MQTT Parser in DataHub V10 to define flexible message formats that work with virtually any other vendor's MQTT clients and brokers.

Watch this video to learn how to set up and use the Advanced JSON Message Format Parser with the Cogent DataHub MQTT broker and/or client plug-ins. Because it can handle virtually any MQTT message format, this parser is key to interoperability between DataHub and other MQTT clients and brokers. For users of MQTT Sparkplug B, which does define a common message format, the DataHub MQTT client and broker both support Sparkplug B; click here for details.

Looking for other how-to videos for DataHub? Click here!

Just to summarize, in this video I've shown you how to use the JSON Advanced Message Formatter, including:

- Adding a new JSON Schema

- Adding a Sample Input "payload" (i.e., the format of a raw JSON message sent to or received from an MQTT broker).

- Generating a JSON Schema from the Sample Input payload (a sample payload should generally be easy to obtain from any other MQTT client or broker). A "schema" is basically a framework that defines the variables in a payload and their associated properties.

- Examples of when it might be appropriate to alter the generated schema.

- Testing and editing the output (i.e., the tags) that a schema will generate

- How to exclude paths from the test output to avoid adding undesired tags from an MQTT payload.

- How to modify the tag names in the output to make them more descriptive, including using other information from the MQTT packet as part of the tag name and removing parts of a JSON path that should not be included.

- How to use the timestamp included in an MQTT packet (where applicable) as the timestamp for the output tags in DataHub.

- Defining the data from the MQTT packet that will be output to the defined tags

- How to source the value, quality and timestamp information so that the defined tags are compatible with other interfaces such as OPC.

- How to convert a JSON date/time to a Date/Time format (there are other methods for converting other time formats, including UNIX time). This is important because JSON has no native date/time type, so timestamps arrive as strings or numbers that other interfaces and systems won't recognize as times.

- Testing the output to confirm that the tag names and their data match the expected output.

- Creating templates from schemas for future use

- Testing advanced MQTT schemas using an external MQTT test client connecting to DataHub
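To illustrate what "generating a schema from a sample payload" means, here is a simplified Python sketch that walks a sample JSON message and records the type of each field using JSON Schema keywords (`type`, `properties`, `items`). This is not DataHub's generator; the function name `infer_schema` and the sample payload are hypothetical, and DataHub's generated schemas may contain more detail.

```python
import json
from typing import Any


def infer_schema(value: Any) -> dict:
    """Return a minimal JSON Schema fragment describing one sample value (hypothetical helper)."""
    # bool must be checked before int, because bool is a subclass of int in Python.
    if isinstance(value, bool):
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "integer"}
    if isinstance(value, float):
        return {"type": "number"}
    if isinstance(value, str):
        return {"type": "string"}
    if value is None:
        return {"type": "null"}
    if isinstance(value, list):
        # Assume a homogeneous array and describe it from its first element.
        return {"type": "array", "items": infer_schema(value[0]) if value else {}}
    if isinstance(value, dict):
        return {
            "type": "object",
            "properties": {key: infer_schema(child) for key, child in value.items()},
        }
    raise TypeError(f"unsupported JSON value: {value!r}")


# Hypothetical sample payload, as it might be copied from another MQTT client.
sample = json.loads('{"device": "pump7", "time": "2024-05-01T12:00:00Z", '
                    '"readings": {"pressure": 3.2, "running": true}}')
print(json.dumps(infer_schema(sample), indent=2))
```

The sketch also shows one reason you might alter a generated schema: a sample containing `"pressure": 3` would be inferred as `integer` here, even if later messages send `3.25`, so you would widen that property to `number`.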

(NOTE: While this video steps through configuring the DataHub MQTT Broker, the same formatting support applies to DataHub MQTT Client configurations as well.)
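The tag-mapping steps in the summary (flattening JSON paths into tags, excluding paths, adding topic information to tag names, removing path segments, and attaching a value, quality and timestamp) can be sketched in Python as follows. This is a hypothetical illustration of the concepts, not how DataHub implements them: the function names, the dot-separated naming rule, the sample message and the constant "Good" quality are all assumptions.

```python
import json
from collections.abc import Iterator
from datetime import datetime, timezone
from typing import Any


def flatten(doc: Any, path: tuple = ()) -> Iterator[tuple[tuple, Any]]:
    """Yield (path, leaf_value) pairs for every scalar leaf of a JSON document."""
    if isinstance(doc, dict):
        for key, child in doc.items():
            yield from flatten(child, path + (key,))
    elif isinstance(doc, list):
        for index, child in enumerate(doc):
            yield from flatten(child, path + (str(index),))
    else:
        yield path, doc


def parse_timestamp(raw: Any) -> datetime:
    """Convert an ISO 8601 string or a UNIX time in seconds (read as UTC) to a datetime."""
    if isinstance(raw, (int, float)):
        return datetime.fromtimestamp(raw, tz=timezone.utc)
    # Before Python 3.11, fromisoformat() rejects a trailing "Z", so rewrite it as +00:00.
    return datetime.fromisoformat(raw.replace("Z", "+00:00"))


def to_tags(topic: str, payload: bytes, exclude: set, strip: set, ts_path: tuple) -> dict:
    """Map one MQTT message to {tag_name: (value, quality, timestamp)} using hypothetical rules."""
    leaves = dict(flatten(json.loads(payload)))
    # Use the payload's own timestamp when present, otherwise the time of arrival.
    if ts_path in leaves:
        timestamp = parse_timestamp(leaves[ts_path])
    else:
        timestamp = datetime.now(timezone.utc)
    tags = {}
    for path, value in leaves.items():
        # Skip the timestamp field itself and anything under an excluded path prefix.
        if path == ts_path or any(path[: len(prefix)] == prefix for prefix in exclude):
            continue
        # Drop unwanted segments (wherever they appear) and prefix the MQTT topic.
        kept = [segment for segment in path if segment not in strip]
        name = ".".join([topic.replace("/", ".")] + kept)
        tags[name] = (value, "Good", timestamp)  # "Good" is a placeholder quality
    return tags


message = (b'{"data": {"pump7": {"pressure": 3.2, "debug": {"raw": 812}}},'
           b' "time": "2024-05-01T12:00:00Z"}')
tags = to_tags("plant/line1", message, exclude={("data", "pump7", "debug")},
               strip={"data"}, ts_path=("time",))
for name, (value, quality, ts) in tags.items():
    print(name, value, quality, ts.isoformat())
# plant.line1.pump7.pressure 3.2 Good 2024-05-01T12:00:00+00:00
```

With this sample message, the `debug` branch is excluded, the `data` segment is removed, the topic becomes the tag-name prefix, and the payload's own `time` field becomes the tag timestamp, leaving a single tag.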

This video focused on the advanced message formatting capabilities included with DataHub V10. For more detail on configuring MQTT connectivity beyond message formatting, the following video resources will be useful:

- Interoperability between OPC UA Clients/Servers and MQTT Sparkplug B

- DataHub MQTT to Google Cloud IoT Core

- DataHub MQTT to Amazon AWS IoT Core

- DataHub as an MQTT Broker

- DataHub MQTT to Digital Twins in Azure IoT Hub

Don't forget to subscribe to our blog to find out about the latest updates to DataHub and for future how-to videos on using DataHub.

Ready to use DataHub to integrate your own MQTT clients and brokers?